Off To The Third Dimension: Safe 3D Environment Perception from SICK Sensor Intelligence

March 24, 2023

Production and logistics processes are highly dynamic, and the requirements for innovative safety solutions are increasing. Until now, 2D LiDAR sensors have been used for two-dimensional hazardous area protection. The world’s first 3D time-of-flight camera with a Performance Level c safety certification, safeVisionary2, now opens up new safety applications with its three-dimensional protective field.

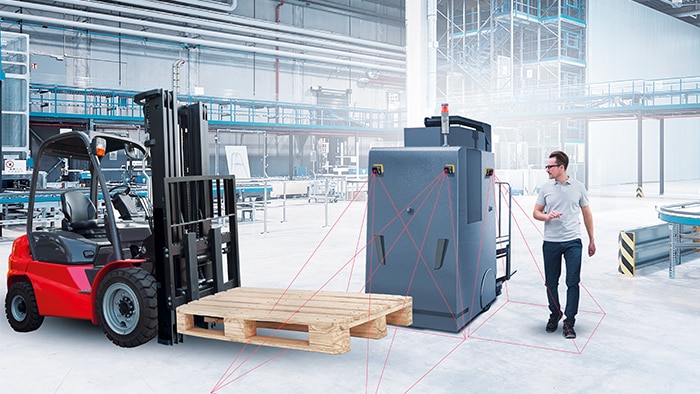

Extended protection and efficient automation of AGVs

Avoiding collisions and a fast restart increase the availability of Automated Guided Vehicles (AGVs) and mobile robots. Using safeVisionary2, obstacles in the direction of travel and above the scan field plane of a safety laser scanner are also safely detected and collisions avoided. In contrast to 2D anti-collision solutions, safeVisionary2 allows an automatic restart to be implemented in many cases. Thanks to the lateral protection, safety is also increased during the turning maneuvers of the vehicle after loading and unloading operations. Besides safety functions, the additional 3D ambient information can also be used for precise localization and navigation of the vehicles. The measurement data are available via Gigabit Ethernet for this purpose.
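
To make the idea of a three-dimensional protective field more concrete, the following minimal sketch checks a 3D point cloud against a box-shaped field. It illustrates the concept only and is not the certified evaluation performed by safeVisionary2. The names `BoxField` and `field_violated`, the coordinate frame, and the `min_points` threshold are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Iterable, Tuple

# (x, y, z) in metres in a hypothetical vehicle frame:
# x = direction of travel, y = lateral, z = height above the floor.
Point = Tuple[float, float, float]


@dataclass(frozen=True)
class BoxField:
    """Hypothetical axis-aligned 3D protective field."""
    x_min: float
    x_max: float
    y_min: float
    y_max: float
    z_min: float
    z_max: float

    def contains(self, p: Point) -> bool:
        x, y, z = p
        return (self.x_min <= x <= self.x_max
                and self.y_min <= y <= self.y_max
                and self.z_min <= z <= self.z_max)


def field_violated(points: Iterable[Point], field: BoxField,
                   min_points: int = 3) -> bool:
    """Return True once at least `min_points` points lie inside the field.

    Requiring several points is an assumed, simple way to ignore
    isolated noise; it is not taken from the product documentation.
    """
    hits = 0
    for p in points:
        if field.contains(p):
            hits += 1
            if hits >= min_points:
                return True
    return False


# Field covering 2 m ahead, 0.5 m to each side, up to 1.8 m high.
field = BoxField(0.0, 2.0, -0.5, 0.5, 0.05, 1.8)

# An overhanging obstacle at 1.2 m height, above a typical 2D scan plane.
overhang = [(1.0, 0.0, 1.2), (1.0, 0.1, 1.2), (1.0, -0.1, 1.2)]
print(field_violated(overhang, field))
```

Because the field has a height extent, the overhanging obstacle in the usage example lies inside it even though it would sit above a single horizontal scan plane.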

Improved human-robot collaboration through increased safety

In the environment of collaborative robots, the range of possible human movements presents a particular challenge for protection. Thanks to the safe 3D environment perception using safeVisionary2, it is also possible to safely detect the upper body and upper limbs of persons, and therefore risks such as reaching or bending over, within the hazardous area. This enables the safety distances to be reduced in many cases.

The camera has a further decisive advantage in the collaboration between humans and robots: the force and power limitation mode of robots is generally designed on the basis of the permissible force values for arms and hands, while contact with sensitive body regions such as the face, skull and forehead is to be avoided. This makes it necessary to stop the robot when it comes into the immediate vicinity of personnel. safeVisionary2 allows the protection of the robot work area to be extended to human head height. A stop is then only necessary if a person actually moves their head into the work area.

Using the sensor data for additional tasks is particularly worthwhile in this application scenario, because the camera can perform many tasks, from detecting empty pallets to object localization and measurement. Thanks to this combination of safety and automation, the cost of implementing additional components is reduced, which saves users money and time.

Safer footing for mobile service robots

When a mobile service robot is used in unstructured environments such as a shopping mall, an especially meticulous risk assessment must be undertaken. Obstacles such as steps or ramps can pose a potential fall risk to robots. Besides evaluating protective and warning fields for route protection, safeVisionary2 can also protect against fall edges. The precise 3D measurement data provide true added value, for example for contour-based navigation and other automation tasks.
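
As a rough illustration of what detecting a fall edge from 3D data can mean, the sketch below scans a floor-height profile ahead of the robot and reports the first distance at which the floor drops too far below the expected level. It is a simplified assumption for this article, not the method used by safeVisionary2. The function name `find_fall_edge`, the profile format, and the 5 cm `max_drop` default are hypothetical.

```python
from typing import List, Optional, Tuple

# (distance ahead in metres, measured floor height in metres relative to
# the plane the robot stands on). A height of None means no measurement.
Sample = Tuple[float, Optional[float]]


def find_fall_edge(profile: List[Sample],
                   max_drop: float = 0.05) -> Optional[float]:
    """Return the distance of the first suspected fall edge, or None.

    A sample counts as a fall edge if the floor lies more than `max_drop`
    below the reference plane. Missing measurements are treated as a
    potential edge, which is a deliberately conservative assumption.
    """
    for distance, height in sorted(profile, key=lambda s: s[0]):
        if height is None or height < -max_drop:
            return distance
    return None


# Flat floor for 1 m, then a 20 cm step down at 1.5 m.
profile = [(0.5, 0.0), (1.0, -0.01), (1.5, -0.20), (2.0, -0.20)]
edge = find_fall_edge(profile)
print(edge)
```

A route planner could then limit the permitted travel distance to a value below the returned edge distance; how that margin is chosen depends on the risk assessment for the specific robot.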

Related Product

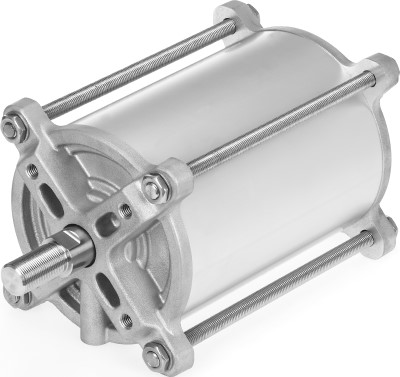

3D LiDAR Sensor multiScan100 from SICK Offers Individual Configuration and Easy Integration

The multiScan100 3D LiDAR sensor is a real multi-talent. Thanks to its high-resolution 0° scan layer, it is suitable for mapping and localization. The sensor generates a 3D point cloud that can be used to detect people and objects. It effortlessly detects fall edges and overhanging obstacles. This is how it reliably protects mobile platforms from accidents and failures. Thanks to its large working range, the sensor is also suitable for stationary applications. The multiScan100 can be individually configured and easily integrated. In addition to the device, there is a continuously growing modular software system with apps and software add-ons. A system plug for common interfaces ensures quick and flexible implementation.