Lighting Up the Quantum Computing Horizon with Aurora

January 28, 2025

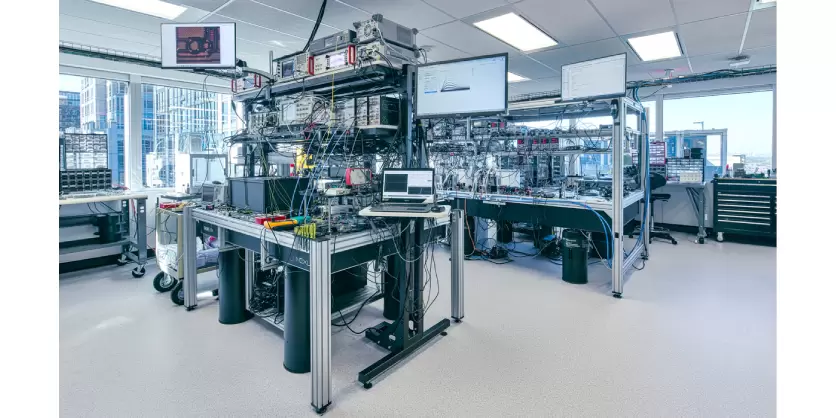

A peek inside Xanadu’s latest and greatest photonic quantum computer—Aurora.

By Zachary Vernon

The Xanadu team has recently announced the successful completion of Aurora, its latest photonic quantum computer. The machine follows a line of increasingly sophisticated system demonstrations, including X8 and Borealis. Both were groundbreaking in their own right: X8 was the first photonic quantum computer to be commercially deployed on the cloud, and Borealis became one of the few machines in the world capable of demonstrating quantum computational advantage.

But Aurora is very different, and much more exciting. A full description of the system has just been published in the peer-reviewed journal Nature. The main takeaways from this significant step forward in the rapidly developing field of quantum computing hardware are summarized here.

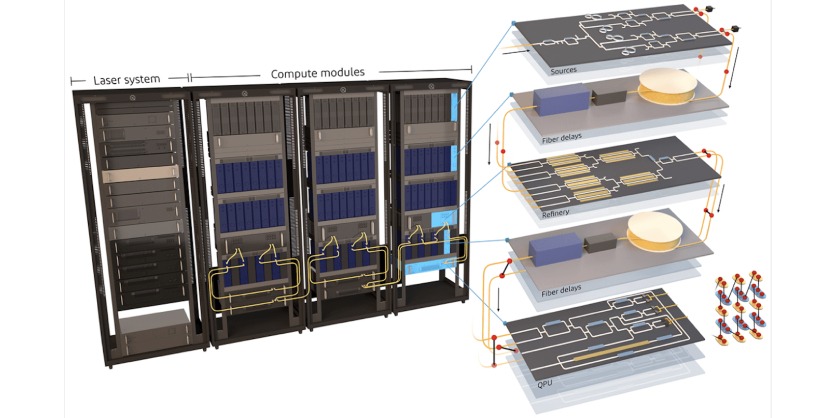

Pictured above, Aurora is a complete prototype of a universal photonic quantum computer, built to a design that will one day be able to perform fault-tolerant computation. It is the first time that Xanadu (or anyone, for that matter) has combined all the subsystems necessary to implement universal, fault-tolerant quantum computation in a photonic architecture. Many earlier demonstrations of individual building blocks toward this goal exist, including Xanadu’s own X8 and Borealis systems, whose key underlying technologies are used within Aurora. However, all such demonstrations lacked one or more features vital to a practical machine.

Aurora, on the other hand, has it all: 35 photonic chips, networked together using a combined 13 km of fiber optics, carrying out all the essential functions needed by Xanadu’s comprehensive blueprint for fault-tolerant quantum computation. These include qubit generation and multiplexing, synthesis of a cluster state with both temporal and spatial entanglement, logic gates, and even real-time error correction and decoding operations that are executed within a single quantum clock cycle. All of the computation in this system happens within the confines of four standard, room-temperature server racks, fully automated and capable of running for hours without any human intervention.
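Multiplexing is what lets probabilistic qubit sources behave almost deterministically. As a generic illustration (an idealized textbook model, not Xanadu’s actual source design or numbers), suppose each of `n` independent heralded sources succeeds with probability `p` per clock cycle and a switch routes out any one successful output. The cycle then succeeds with probability 1 - (1 - p)^n. The function names and the 10% success probability below are hypothetical.

```python
from typing import Optional, Sequence


def multiplexed_success(p_single: float, n_sources: int) -> float:
    """Probability that at least one of n independent heralded sources succeeds."""
    if not 0.0 <= p_single <= 1.0:
        raise ValueError("p_single must be a probability")
    if n_sources < 1:
        raise ValueError("n_sources must be at least 1")
    return 1.0 - (1.0 - p_single) ** n_sources


def select_source(heralds: Sequence[bool]) -> Optional[int]:
    """Index of the first source whose herald fired, i.e. the one a switch would route out."""
    for index, fired in enumerate(heralds):
        if fired:
            return index
    return None  # no source succeeded this clock cycle


# Illustrative numbers only: a hypothetical 10% per-source success probability.
for n in (1, 4, 16, 64):
    print(f"{n:>2} sources -> {multiplexed_success(0.1, n):.4f}")
```

Under these assumptions the success probability climbs from 0.1 with one source to above 0.99 with 64, which is why generation and multiplexing appear together in the list of subsystems.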

The construction of Aurora is the outcome of a year of dedicated effort by nearly the entire Xanadu hardware and architecture teams, and represents the convergence of many years of innovation in photonic chip design, packaging, electronics, and systems design and integration. As described in the paper, the team put Aurora through a set of rigorous benchmarks to showcase its novel technological features. In one trial, they ran the system continuously for two hours and monitored the entanglement present in the cluster state across 86 billion modes, the largest number ever accessed in an experiment of this kind.
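To put the scale of that run in perspective, a quick back-of-the-envelope calculation gives the implied average throughput. It assumes the 86 billion modes were spread uniformly over exactly two hours; the actual clock rate and duty cycle of Aurora are not stated here.

```python
# Figures quoted in the text; the uniform-rate assumption is ours, not Xanadu's.
TOTAL_MODES = 86e9           # modes monitored during the run
RUN_SECONDS = 2 * 60 * 60    # two hours of continuous operation

average_rate = TOTAL_MODES / RUN_SECONDS
print(f"{average_rate:.3e} modes per second")
```

Under that assumption the system handled roughly 12 million modes per second on average, sustained for the full run.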

Quantum correlations were also used to verify that the single-clock-cycle decoding functions were working properly. This capability refers to making quantum measurements, then rapidly (using classical controllers, in Xanadu’s case FPGAs) detecting errors and computing corrective quantum gates that are implemented on the next quantum clock cycle. This sequence of operations will be critical for all fault-tolerant quantum computers, and had never before been demonstrated in a photonic machine.
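The timing structure of this measure, decode, and correct-on-the-next-cycle loop can be sketched with a deliberately simple toy model. The sketch below is hypothetical and classical: it uses a 3-bit repetition code against bit flips with a lookup-table decoder, not Xanadu’s code, decoder, or FPGA logic, and it reads the data bits directly, which a real quantum code would not do. What it mirrors is only the feedforward pattern: a syndrome measured on one clock cycle produces a correction that is applied at the start of the next.

```python
import random

# Toy 3-bit repetition code; parity checks compare neighbours: s0 = b0^b1, s1 = b1^b2.
# Maps each syndrome to the bit that most likely flipped (None = no error detected).
LOOKUP = {(0, 0): None, (1, 0): 0, (1, 1): 1, (0, 1): 2}


def measure_syndrome(bits):
    """Parity checks between neighbouring bits."""
    return (bits[0] ^ bits[1], bits[1] ^ bits[2])


def decode(syndrome):
    """Stand-in for the fast classical decoder (hypothetical lookup table)."""
    return LOOKUP[syndrome]


def run(cycles, flip_prob, seed=0):
    rng = random.Random(seed)
    bits = [0, 0, 0]
    pending = None  # correction decided on one cycle, applied on the next
    for _ in range(cycles):
        # 1. Apply the correction computed during the previous clock cycle.
        if pending is not None:
            bits[pending] ^= 1
            pending = None
        # 2. Noise: each bit flips independently with probability flip_prob.
        for i in range(3):
            if rng.random() < flip_prob:
                bits[i] ^= 1
        # 3. Measure the syndrome and schedule a correction for the next cycle.
        pending = decode(measure_syndrome(bits))
    # Flush any correction still pending after the final cycle.
    if pending is not None:
        bits[pending] ^= 1
    return bits


print(run(cycles=1000, flip_prob=0.01, seed=1))
```

The one-cycle delay between decoding and correction is the point of the sketch: the decoder must finish before the next cycle begins, which is the latency constraint the single-clock-cycle demonstration addresses.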

Xanadu’s paper detailing these demonstrations is entitled *Scaling and networking a modular photonic quantum computer*. The title was chosen carefully to reflect three major, closely related aspects of the architecture that Aurora substantially de-risks: scalability, networkability, and modularity. A truly useful quantum computer will require a very large number of physical qubits, so any viable approach needs a clear template for scaling up. Regardless of the hardware platform, these qubits will not fit into one contiguous system. Modularity is therefore crucial: there must be a straightforward way to distribute the qubits among discrete modules that can be mass-manufactured independently.

And modularity is useless without the ability to network the modules together so that entanglement can be shared across separate chips. Aurora is a powerful demonstration of all three, and allows Xanadu to set aside any doubts about these aspects of its photonic architecture.

These scalability features are necessary for long-term success in quantum computing, but alone they are not sufficient. To make scaling up worthwhile, physical qubit performance must also be sufficiently high. Otherwise, error correction cannot work, and adding more qubits simply makes logical error rates worse. Once component performance passes a level known as the fault tolerance threshold, this asymptotic trend flips: adding qubits suppresses logical error rates, enabling algorithms to run for longer. This is necessary for all known high-value quantum computing applications, and is ultimately required for the fledgling quantum computing industry to deliver a positive return on investment.
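The flip in the trend can be illustrated with a widely used heuristic from the error-correction literature (commonly quoted for surface codes, and not taken from Xanadu’s paper): the logical error rate scales roughly as p_L ≈ A (p / p_th)^((d+1)/2), where `p` is the physical error rate, `p_th` the threshold, and `d` the code distance, which grows as more physical qubits are added. The exponent reflects that a distance-`d` code corrects up to (d-1)/2 errors, so a logical failure needs about (d+1)/2 physical faults. The constants `A = 0.1` and `p_th = 0.01` below are illustrative assumptions, and above threshold the formula is only qualitative.

```python
def logical_error_rate(p: float, d: int, p_th: float = 0.01, prefactor: float = 0.1) -> float:
    """Heuristic p_L ~ A * (p / p_th) ** ((d + 1) / 2), capped at 1; d is an odd code distance."""
    return min(1.0, prefactor * (p / p_th) ** ((d + 1) / 2))


# One physical error rate below the assumed threshold, one above it.
for p in (0.005, 0.012):
    rates = [logical_error_rate(p, d) for d in (3, 5, 7)]
    trend = "falls" if rates[-1] < rates[0] else "rises"
    print(f"p={p}: " + ", ".join(f"{r:.2e}" for r in rates) + f" ({trend} as d grows)")
```

Below threshold each step up in distance halves the logical error rate in this toy setting; above it, the same extra qubits make things worse, matching the behaviour described above.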

In Xanadu’s photonic approach, crossing the fault tolerance threshold translates into driving optical losses down. When photons propagate through chip and fiber components, small imperfections, such as material impurities or roughness at optical interfaces, can cause some of the light to be absorbed or scattered away. Reducing such losses in the components is largely all that stands in the way of a system like Aurora achieving fault tolerance. As part of this project, and as reported in Xanadu’s publication, the team undertook a detailed study of the optical loss requirements of the architecture, combining its latest optimizations to refine and update the architectural blueprints and to derive precise quantitative loss budgets for every optical path in the system.
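A loss budget of this kind is usually tracked in decibels, because losses in dB simply add along a chain of cascaded components while the surviving fraction of light multiplies. The sketch below shows that bookkeeping for one optical path. The component names, per-component losses, and the 1.0 dB budget are all hypothetical placeholders, not figures from Xanadu’s study.

```python
import math


def db_to_transmission(loss_db: float) -> float:
    """Fraction of light that survives a component with the given loss in dB."""
    return 10 ** (-loss_db / 10)


def path_loss_db(component_losses_db: dict) -> float:
    """Losses in dB add along a path of cascaded components."""
    return sum(component_losses_db.values())


# Hypothetical optical path and numbers, for illustration only.
path = {
    "source chip": 0.5,
    "chip-to-fiber coupling": 0.3,
    "fiber delay line": 0.2,
    "switch chip": 0.4,
    "detector inefficiency": 0.2,
}
BUDGET_DB = 1.0  # hypothetical allowed loss for this path

total_db = path_loss_db(path)
# Multiplying per-component transmissions gives the same result as converting the dB sum.
total_transmission = math.prod(db_to_transmission(v) for v in path.values())
print(f"total loss {total_db:.2f} dB, transmission {total_transmission:.3f}")
if total_db <= BUDGET_DB:
    print("within budget")
else:
    print(f"over budget by {total_db - BUDGET_DB:.2f} dB")
```

With these made-up numbers the path loses 1.6 dB and exceeds its budget, which shows how a per-path budget points directly at which components need improvement.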

The results of this study give Xanadu a clear mandate for how to improve the performance of its components to reach the fault tolerance threshold. The chips used within Aurora were based on commercially available fabrication platforms that had not been specially optimized for this application. Meanwhile, Xanadu has been working with its foundry partners on customized fabrication processes that will satisfy the stringent performance demands of fault-tolerant operation. This mandate is now the sole focus of the Xanadu hardware and architecture teams, and much exciting progress has already been made in winning this war on loss. Stay tuned!

About Xanadu: Xanadu is a quantum computing company with the mission to build quantum computers that are useful and available to people everywhere. Founded in 2016, Xanadu has become one of the world’s leading quantum hardware and software companies. The company also leads the development of PennyLane, an open-source software library for quantum computing and application development.
